Virtualisation with Xen and openQRM on Debian

This How-To explains how to create and manage Xen Virtual Machines on Debian with openQRM. It is the first How-To that requires two systems, and it shows how to integrate an additional, existing, locally installed server into openQRM, using the example of adding a Xen Host to an existing openQRM environment.

Requirements

- Two physical servers. Alternatively, openQRM itself can be installed within a Virtual Machine

- at least 1 GB of memory

- at least 100 GB of disk space

- VT (Virtualization Technology) enabled in the system's BIOS so that the system(s) can run Xen HVM Virtual Machines later

- Minimal Debian Wheezy installation on a physical server

- Install and initialize openQRM

NOTE

- If you do not already have openQRM fully installed, follow this How-To to do so

- For this How-To, we also assume you are using the same openQRM server (updated to version 5.1.3) as for the How-To about 'Virtualisation with KVM and openQRM on Debian' and 'Automated Amazon EC2 Cloud deployments with openQRM on Debian'. That means with this How-To we are going to add functionality to an existing openQRM setup. This is to show that openQRM manages all different virtualisation and deployment types seamlessly.
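
The VT requirement listed above can be checked from a running Linux system before Xen is installed. The following is a minimal sketch, assuming a Linux system that exposes CPU flags in /proc/cpuinfo; the helper name has_vt_flag is made up for this example. It only shows that the CPU advertises Intel VT-x (vmx) or AMD-V (svm). Whether the feature is switched on still has to be verified in the BIOS setup, and the flag may be hidden once the system runs under Xen.

```shell
#!/bin/sh
# Succeeds if the given cpuinfo file (default: /proc/cpuinfo) lists
# the Intel (vmx) or AMD (svm) hardware virtualization flag.
has_vt_flag() {
    grep -Eq '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' "${1:-/proc/cpuinfo}"
}

if has_vt_flag; then
    echo "CPU reports hardware virtualization support"
else
    echo "no vmx/svm flag found; check the CPU model and the BIOS setting"
fi
```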

Set a custom Domain name

Skip this if you've already done this step in a previous How-To

As the first step after the openQRM installation and initialization it is recommended to configure a custom domain name for the openQRM management network.

In this Use-Case the openQRM Server has the private Class C IP address 192.168.178.5/255.255.255.0, as set up in the previous How-To 'Install openQRM 5.1 on Debian Wheezy'. Since the openQRM management network is a private one, any syntactically correct domain name can be used, e.g. 'my123cloud.net'.

The default domain name pre-configured in the DNS plugin is "oqnet.org".

Best practice is to use the 'openqrm' command-line utility to set up the domain name for the DNS plugin. Please log in to the openQRM Server system and run the following command as 'root' in a terminal:

/usr/share/openqrm/bin/openqrm boot-service configure -n dns -a default -k OPENQRM_SERVER_DOMAIN -v my123cloud.net

The output of the above command looks like this:

root@debian:~# /usr/share/openqrm/bin/openqrm boot-service configure -n dns -a default -k OPENQRM_SERVER_DOMAIN -v my123cloud.net

Setting up default Boot-Service Configuration of plugin dns

root@debian:~#

To (re)view the current configuration of the DNS plugin please run:

/usr/share/openqrm/bin/openqrm boot-service view -n dns -a default

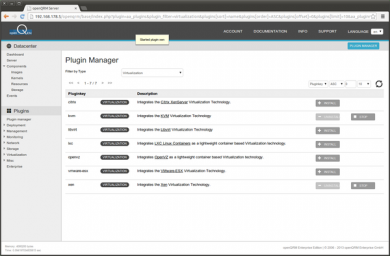
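
The two commands above can be combined into one small script. This is a minimal sketch, not part of openQRM: the helper is_valid_domain and the DOMAIN variable are made up for this example, and the check is only a rough syntactic test. The openqrm commands are the ones shown above and are only run if the openqrm utility is present.

```shell
#!/bin/sh
# Hypothetical helper: rough syntactic check of a domain name
# (dot-separated labels of letters, digits and hyphens, alphabetic TLD).
is_valid_domain() {
    printf '%s\n' "$1" |
        grep -Eq '^([A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?\.)+[A-Za-z]{2,}$'
}

OPENQRM=/usr/share/openqrm/bin/openqrm
DOMAIN=${DOMAIN:-my123cloud.net}

if is_valid_domain "$DOMAIN" && [ -x "$OPENQRM" ]; then
    # Set the domain name, then print the resulting DNS plugin configuration.
    "$OPENQRM" boot-service configure -n dns -a default -k OPENQRM_SERVER_DOMAIN -v "$DOMAIN"
    "$OPENQRM" boot-service view -n dns -a default
else
    echo "skipping: invalid domain name or $OPENQRM not found" >&2
fi
```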

Enabling Plugins

In the openQRM Plugin Manager please enable and start the following plugins in the sequence below:

- dns plugin - type Networking

- dhcpd plugin - type Networking

- tftpd plugin - type Networking

- network-manager plugin - type Networking

- local-server plugin - type Misc

- device-manager plugin - type Management

- novnc plugin - type Management

- sshterm plugin - type Management

- linuxcoe plugin - type Deployment

- xen plugin - type Virtualization

Hint: You can use the filter in the plugin list to find plugins by their type easily!

Install Debian Wheezy on the second system dedicated for the Xen Host

Install a minimal Debian Wheezy on the second physical Server.

During the installation select 'manual network' configuration and provide a static IP address. In this How-To we will use 192.168.178.6/255.255.255.0 as the IP configuration for the Xen Host system (the openQRM Server itself uses 192.168.178.5).

In the partitioning setup please select 'manual' and create one partition for the root-filesystem, one as swap space plus a dedicated partition to be used as storage space for the Virtual Machines later. In the configuration of the dedicated storage partition select 'do not use'.

In the software selection dialog select just 'SSH-Server'

After the installation has finished, please log in to the system as 'root' and update its packages:

apt-get update && apt-get upgrade

Then install the Xen Hypervisor plus its package dependencies by the following command:

apt-get install xen-linux-system-amd64

To set the Xen Hypervisor as the default kernel in Grub please edit "/etc/default/grub" and update

GRUB_DEFAULT=0

to

GRUB_DEFAULT=2

(After a regular Debian installation the Xen Hypervisor is normally the third boot option in /boot/grub/grub.cfg. Counting from 0, GRUB_DEFAULT should therefore be set to 2.) Then run:

update-grub && reboot

to update the Grub boot-loader and to reboot the system into the Xen Hypervisor.
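
Because the position of the Xen entry can differ between installations, it may be worth confirming the index before changing GRUB_DEFAULT. The following is a minimal sketch, assuming that top-level entries in grub.cfg start with 'menuentry' or 'submenu' at the beginning of a line and that the Xen entry has 'Xen' in its title; the function name xen_entry_index is made up for this example.

```shell
#!/bin/sh
# Print the zero-based index of the first top-level GRUB entry whose
# line mentions "Xen". Prints nothing if no such entry is found.
xen_entry_index() {
    awk '/^(menuentry|submenu) / {
             if ($0 ~ /Xen/) { print n + 0; exit }
             n++
         }' "$1"
}

CFG=/boot/grub/grub.cfg
if [ -r "$CFG" ]; then
    echo "Xen entry index: $(xen_entry_index "$CFG")"
fi
```

If the printed index is not 2, use the printed value for GRUB_DEFAULT instead.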

After the reboot, log in to the second system again and ensure that the system has booted the Xen Hypervisor by running:

xm list

The output of this command should be similar to the following:

root@debian:~# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 3514 2 r----- 20.9

root@debian:~#
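
The same check can be scripted. This is a minimal sketch that looks for the Domain-0 row in the 'xm list' output; the helper name has_dom0 is made up for this example, and the xm command is only called if it is installed.

```shell
#!/bin/sh
# Succeeds if the 'xm list' output read from stdin contains a Domain-0 row.
has_dom0() {
    awk '$1 == "Domain-0" { found = 1 } END { exit !found }'
}

if command -v xm >/dev/null 2>&1; then
    if xm list | has_dom0; then
        echo "Xen hypervisor is running"
    else
        echo "Domain-0 not found; the system may have booted a non-Xen kernel" >&2
    fi
fi
```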

Enable VNC access to the Virtual Machine console on the Xen Host

To enable VNC access to the Virtual Machine console on the Xen Host please edit '/etc/xen/xend-config.sxp' and adapt the 'vnclisten' parameter. Uncomment and update it to:

vnclisten="0.0.0.0"

Then restart Xen to activate the new VNC configuration.

/etc/init.d/xend restart

Integrate the Xen System into openQRM via the "local-server" plugin

Copy (scp) the openqrm-local-server integration tool from the openQRM server to the second System dedicated for the Xen Host:

scp /usr/share/openqrm/plugins/local-server/bin/openqrm-local-server 192.168.178.6:

Then log in (ssh) to the second System and run the openqrm-local-server tool with the 'integrate' parameter:

./openqrm-local-server integrate -u openqrm -p openqrm -q 192.168.178.5 -n xen -i eth0 -s http

Here is how it looks on the second system's terminal console:

root@debian:~# ./openqrm-local-server

Usage : ./openqrm-local-server integrate -u <user> -p <password> -q <ip-address-of-openQRM-server> [ -n <hostname> ] [-i <network-interface>] [-s <http/https>]

./openqrm-local-server remove -u <user> -p <password> -q <ip-address-of-openQRM-server> [ -n <hostname> ] [-s <http/https>]

root@xen1:~# ./openqrm-local-server integrate -u openqrm -p openqrm -q 192.168.178.5 -n xen -i eth0 -s http

Integrating system to openQRM-server at 192.168.178.5

-> could not find dropbear. Trying to automatically install it ...

Reading package lists... Done

Building dependency tree

........... (more output and automatic package installation)

root@debian:~#
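
The copy and integrate steps above can also be driven from the openQRM server in one go. The following is a minimal sketch using the IP addresses and credentials from this How-To; the function name integrate_cmd and the DRY_RUN switch are made up for this example. By default it only prints the commands, so they can be reviewed first.

```shell
#!/bin/sh
# Values from this How-To; adjust them to your own environment.
OPENQRM_IP=192.168.178.5
XEN_HOST_IP=192.168.178.6
TOOL=/usr/share/openqrm/plugins/local-server/bin/openqrm-local-server

# Print the integrate command line.
# Arguments: user, password, hostname, network interface.
integrate_cmd() {
    printf './openqrm-local-server integrate -u %s -p %s -q %s -n %s -i %s -s http\n' \
        "$1" "$2" "$OPENQRM_IP" "$3" "$4"
}

# DRY_RUN=1 (the default in this sketch) only prints what would be executed.
if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "scp $TOOL $XEN_HOST_IP:"
    echo "ssh $XEN_HOST_IP \"$(integrate_cmd openqrm openqrm xen eth0)\""
else
    scp "$TOOL" "$XEN_HOST_IP:" &&
        ssh "$XEN_HOST_IP" "$(integrate_cmd openqrm openqrm xen eth0)"
fi
```

Run it with DRY_RUN=0 to actually copy the tool and start the integration.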

Create the Virtualization Host Object

NOTE: In this How-To we will refer to this second, integrated system as the 'Xen Host'. In the openQRM UI the Xen Host is named 'xen'.

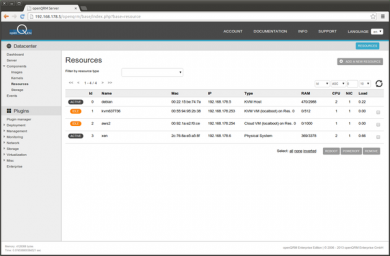

The integration via the 'local-server' plugin created a new resource object for the Xen Host in openQRM.

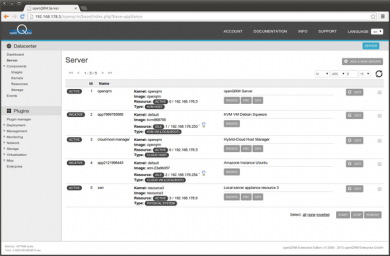

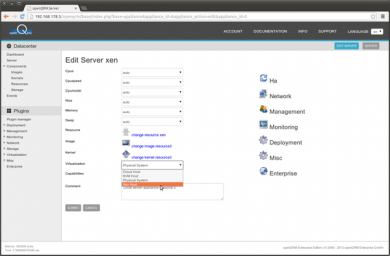

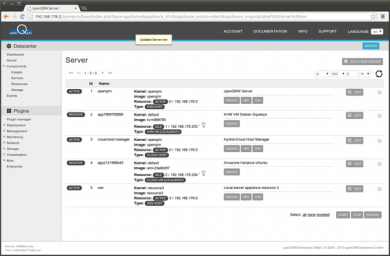

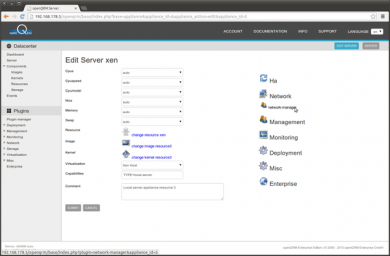

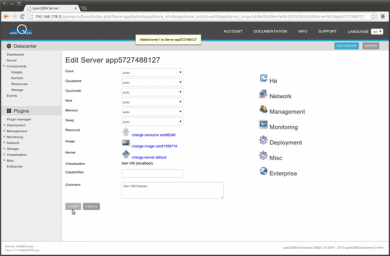

It also created a new server object for the integrated Xen Host to allow further management. Go to Datacentre -> Server and click on 'Edit' of the Xen Host.

In the following Edit dialog please set 'Xen Host' as the Virtualization type of the second server, then submit to save

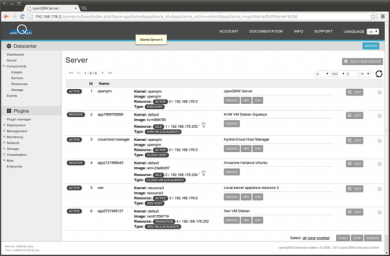

This is the Server list after configuring the Virtualization Host Object

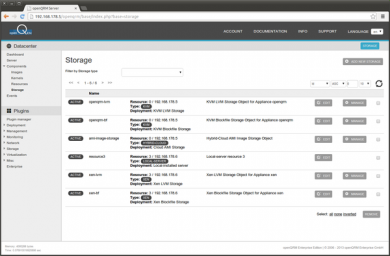

Additionally, two Xen Storage Objects are automatically created to manage different types of VM volumes.

Create an LVM Volume Group

The Xen Storage type with the best flexibility regarding volume management is 'Xen LVM Storage'. Each VM gets its own dedicated logical volume as its root image. Alternatively 'Xen Blockfile Storage' can be used. Volumes for this storage type are created on the Host's filesystem via the qemu-img command. This How-To describes the 'Xen LVM Storage' type.

Go to Datacentre -> Storage and click on the 'manage' button of the 'xen-lvm' storage object

NOTE: The system automatically checks whether the system utilities for the LVM functionality are available. If not, openQRM automatically installs them via the distribution package manager. This may delay the first execution of the command on the system.

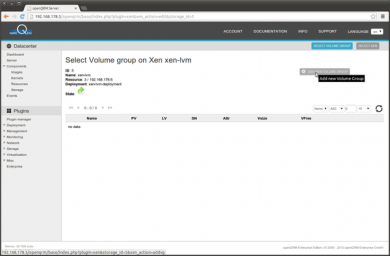

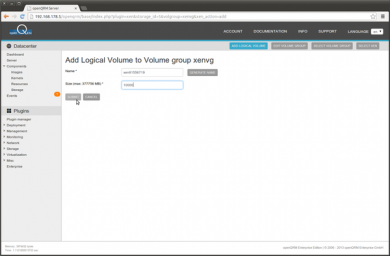

Now click on 'Add new Volume group'

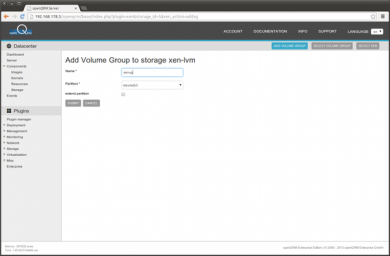

If you followed the partitioning setup during the second system's Debian installation, there is now a dedicated /dev/sda3 partition available which can be used to create an LVM Volume group. Please provide a name for the LVM Volume group (here 'xenvg'), select the /dev/sda3 device and submit.

Please note: on the Xen Host used for this How-To we are going to use /dev/sdb3! If you installed on a single disk, your choice will still be /dev/sda3.

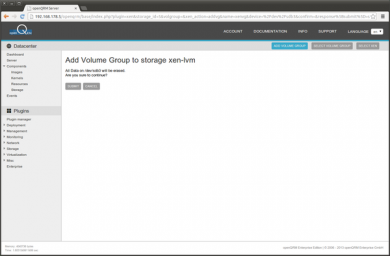

Confirm to create the new Volume group

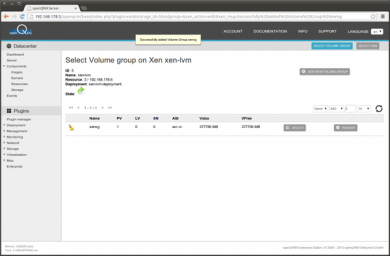

The LVM Volume group to store the VM Images is created now

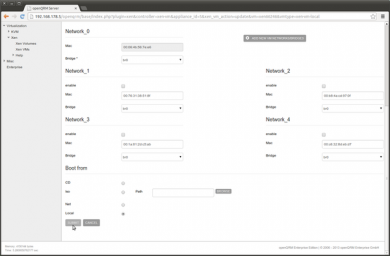
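
For reference, creating the same volume group by hand on the Xen Host would typically use the standard LVM tools. The following is a minimal sketch that only prints the commands; that openQRM runs exactly these commands internally is an assumption, and the function name vg_create_cmds is made up for this example.

```shell
#!/bin/sh
# Print the LVM commands that create a volume group on a single partition.
# Arguments: volume group name, block device.
vg_create_cmds() {
    echo "pvcreate $2"      # initialise the partition as a physical volume
    echo "vgcreate $1 $2"   # create the volume group on top of it
    echo "vgs $1"           # show the new volume group
}

vg_create_cmds xenvg /dev/sda3
```

Printing instead of executing keeps the sketch harmless, since pvcreate overwrites whatever is on the partition.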

Create one or more network bridges

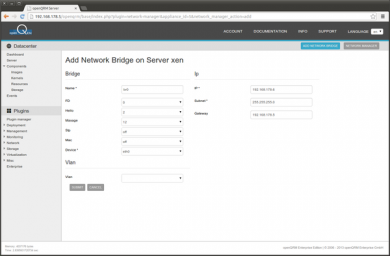

Xen uses network bridges to connect the Virtual Machines' virtualized network interfaces to real networks.

Go to Datacentre -> Server and click on 'Edit' of the Xen Host server object

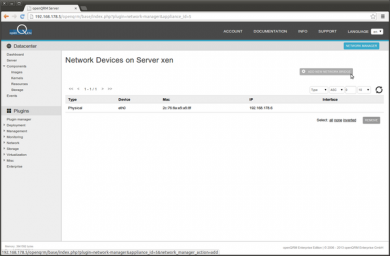

In the server edit section please select Network -> Network Manager.

This will provide a list of all available network interfaces on the system.

NOTE: The system automatically checks whether the system utilities for the bridge and network functionality are available. If not, openQRM automatically installs them via the distribution package manager. This may delay the first execution of the command on the system.

Please click on 'Add new network bridge' to create a new network bridge

Fill in 'br0' as the bridge name, select eth0 (the Xen Host's management network interface) and insert the same IP configuration as for eth0. In this How-To the IP 192.168.178.6/255.255.255.0 is used with the default gateway 192.168.178.5.

Then click on submit to create the new network bridge.

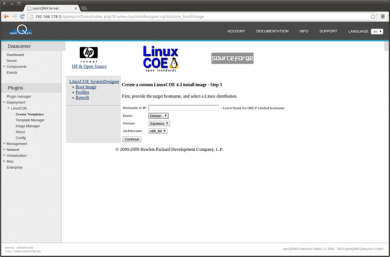
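
For comparison, a bridge with these settings is commonly described on Debian by a stanza in /etc/network/interfaces, using the options provided by the bridge-utils package. The following is a minimal sketch that prints such a stanza; what the Network Manager actually writes on the Xen Host is not shown in this How-To, so treat the result as an assumption. The function name bridge_stanza is made up for this example.

```shell
#!/bin/sh
# Print a Debian /etc/network/interfaces stanza for a static bridge.
# Arguments: bridge name, physical interface, address, netmask, gateway.
bridge_stanza() {
    cat <<EOF
auto $1
iface $1 inet static
    address $3
    netmask $4
    gateway $5
    bridge_ports $2
    bridge_stp off
    bridge_fd 0
EOF
}

bridge_stanza br0 eth0 192.168.178.6 255.255.255.0 192.168.178.5
```

Note that the IP configuration moves from eth0 to br0; eth0 itself only appears as a bridge port.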

Create a LinuxCOE automatic-installation template

The LinuxCOE Project provides a useful UI to create automatic-installation ISO images for various Linux distributions, using e.g. preseed, kickstart and autoyast. Those ISO images can then be used to install a Linux distribution fully automatically, without any manual interaction.

The integration of LinuxCOE in openQRM makes those automatic-installation ISO images automatically available on all Virtualization Hosts (mounted by nfs at /linuxcoe from the openQRM server). This makes it easy to configure a Virtual Machine's installation boot image from the central ISO Pool mount point.

NOTE: The LinuxCOE plugin in openQRM comes with a fully automatic setup and pre-configuration of LinuxCOE. Since LinuxCOE is an installation framework, it is recommended to add further custom configuration such as local package mirrors, new distribution data and config files etc. Please read more about how to further enhance your LinuxCOE installation at http://linuxcoe.sourceforge.net/#documentation

First step is to create a new automatic-installation profile and ISO image.

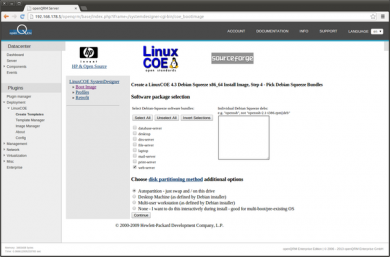

Go to Plugins -> Deployment -> LinuxCOE -> Create Templates and select a Linux distribution and version for the automatic-installation. For this How-To we will use 'Debian Squeeze 64bit'. Please leave the hostname input empty since openQRM will take care of this via its dhcpd plugin.

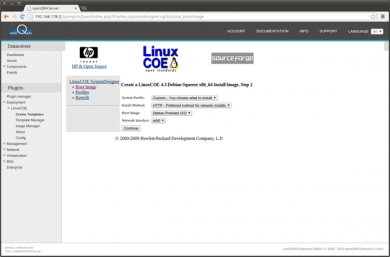

Leave the default settings on the next page of LinuxCOE wizard.

Next select a Mirror from the list.

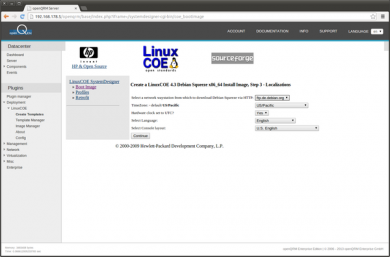

Here provide your custom package setup for the automatic-installation.

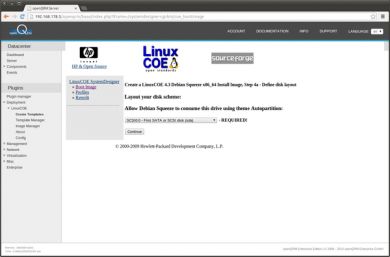

On the following page leave the default settings.

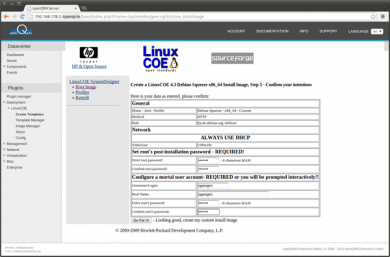

The summary page of the LinuxCOE wizard allows you to preconfigure a root and user account. Click on 'Go for it' to create the automatic-installation template.

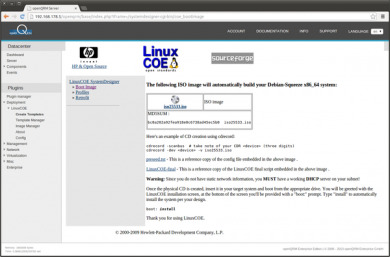

The ISO image is created. No need to download it since it will be used directly by the Xen Host for a Xen Virtual Machine installation from a central '/linuxcoe-iso' NFS share on the openQRM server.

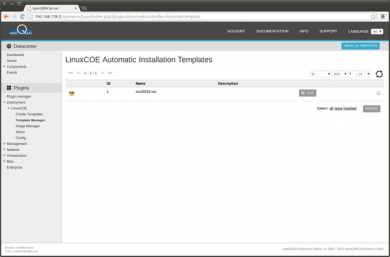

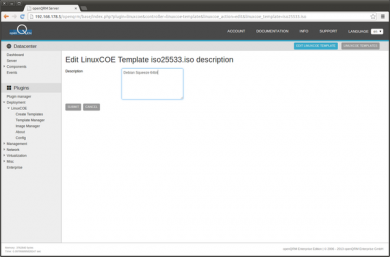

Go to Plugins -> Deployment -> LinuxCOE -> Template Manager and click on 'Edit'.

Now provide a description for the just created automatic-installation template.

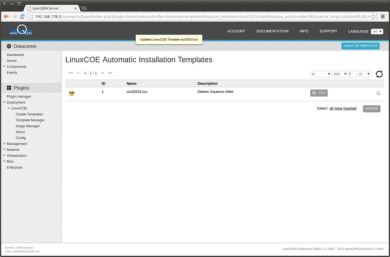

Here's the list view of the updated automatic-installation template.

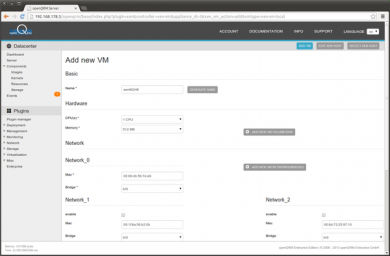

Create a new Xen Virtual Machine

Use openQRM's Server Wizard to add a new Xen Virtual Machine. This Wizard works in the same way for physical systems, KVM VMs, Xen VMs, Citrix XenServer VMs, VMware VMs, LXC VMs and openVZ VMs.

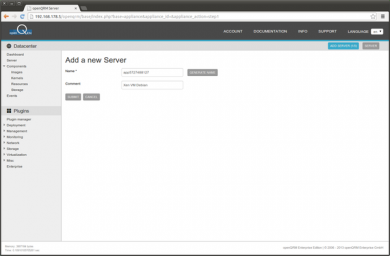

Go to Datacenter -> Server -> Add a new Server

Please give a name for the new server. Easiest is to use the 'generate name' button. Also provide a useful description.

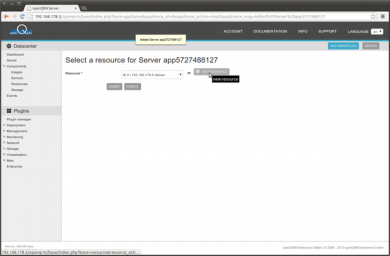

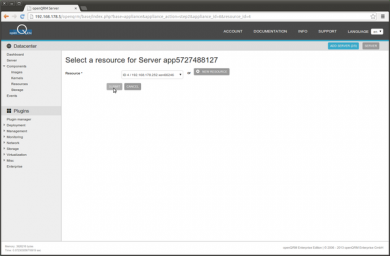

In the Resource-Selection please click on 'new resource'. A resource in openQRM is a logical generic object which is mapped to a physical system or Virtual Machine of any type.

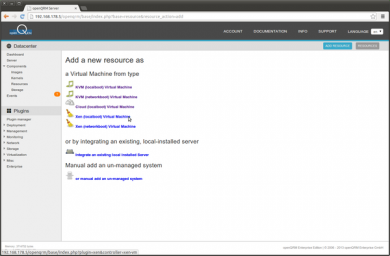

On the next page find a selection of different resource types to create. Please choose 'Xen (localboot) Virtual Machine'

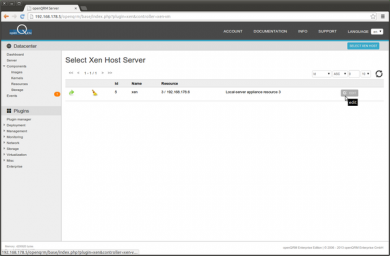

This forwards to the Xen Host selection. Please select the Xen Host as the Virtualization Host of the VM

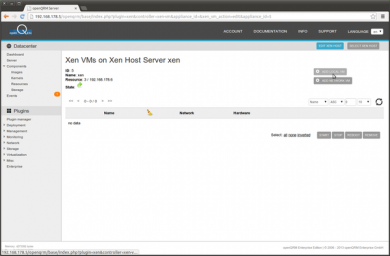

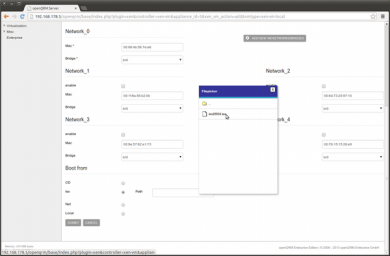

On the Xen Virtual Machine Overview please click on 'Add local VM'

In the VM add form provide a name for the new VM. Again the easiest is to use the 'generate name' button. There are lots of different parameters which can be configured, but you can keep the defaults for most of them.

Further down the VM add form please configure the boot sequence of the VM. Select 'iso' and open the Filepicker by clicking on the 'Browse' button. This will open a small new window listing the filesystem of the Virtualization Host. Navigate to '/linuxcoe-

Creating the new VM automatically forwards back into the server wizard with the newly created resource available. Select the new resource and 'Submit'

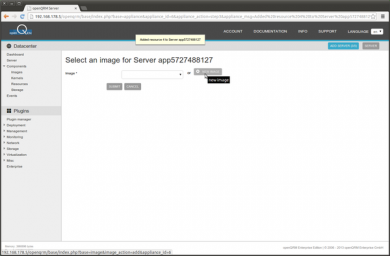

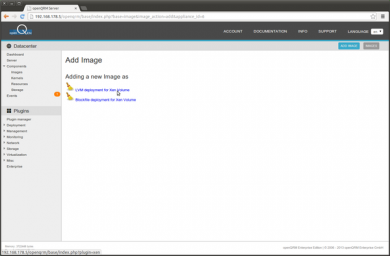

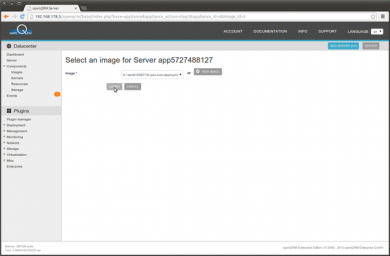

We haven't created an Image for the Virtual Machine yet. An Image in openQRM is a logical and generic object which is mapped to the physical volumes of different storage types. So click on 'New Image'

Adding a new Image forwards to the Image type selection. Please select 'LVM deployment for Xen volume'

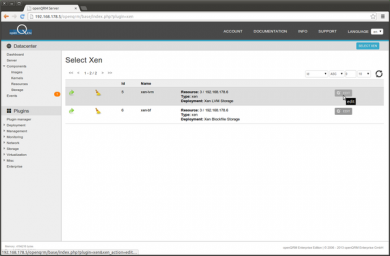

In the storage server selection please select the 'xen-lvm' storage object

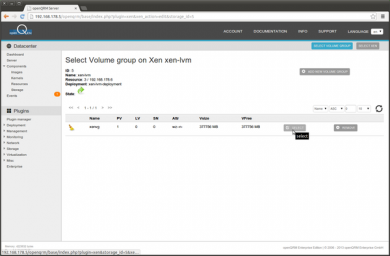

Then select the 'xenvg' LVM volume group

In the LVM volume overview click on 'Add new logical volume'

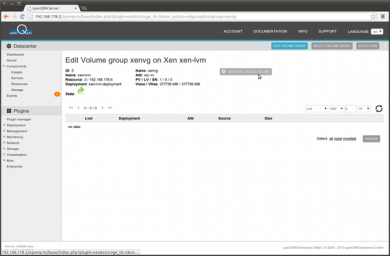

Provide a name and size (in MB) for the new volume. Again the easiest is to use the 'generate name' button. Then 'Submit'

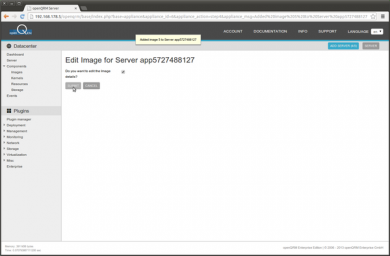

Creating the new Image automatically forwards back into the server wizard with the newly created image available. Select the new image and 'Submit'

Click on 'Submit' to edit the Image parameters

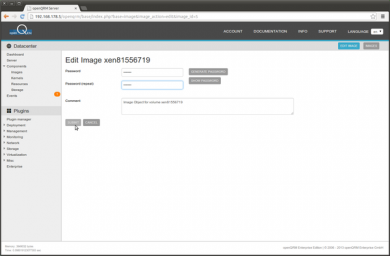

The following step allows you to edit further Image parameters, e.g. setting a root password

The last step in the server wizard presents the full configuration and allows you to further set up the network, management, monitoring and deployment configuration. Click on 'Submit' to save the server configuration.

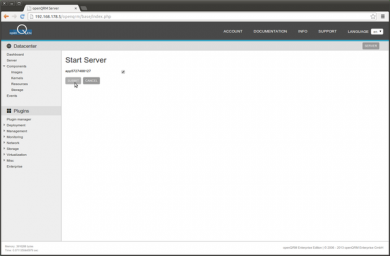

The server overview lists the new server, which is not yet activated. Please select the newly created server and click on 'Start'

Confirm starting the server

The new server is now started and marked as 'active'. Starting the logical server object triggers the actual start of the resource (the Xen VM) with the configured Image (the LVM volume) and triggers additional automatic configuration tasks via plugin hooks. These server start and stop hooks "ask" each activated plugin whether there is "some work to do". A few examples of how hooks are used in openQRM are listed below:

- The DNS plugin uses those hooks to automatically add the server name to (or remove it from) the managed bind server

- The DHCPD plugin adds the "hostname option" for the server to its configuration

- The Nagios plugin adds/removes service checks for automatic monitoring

- The Puppet plugin activates configured application recipes to automatically setup and pre-configure services on the VM
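
The idea behind such start and stop hooks can be illustrated with a few lines of shell. This is a purely conceptual sketch and not openQRM's actual implementation: the directory layout, the function name run_hooks and the two demo "plugins" are all made up for this example. Each script in a hook directory is called with the event and the server name and decides for itself whether there is work to do.

```shell
#!/bin/sh
# Call every hook script in a directory with the event and the server name.
# Arguments: hook directory, event (start or stop), server name.
run_hooks() {
    for hook in "$1"/*; do
        [ -f "$hook" ] || continue
        sh "$hook" "$2" "$3"
    done
}

# Demo with two throwaway "plugins" in a temporary directory.
demo=$(mktemp -d)
printf 'echo "dns: %s"\n' 'handling $1 for $2' > "$demo/10-dns"
printf 'echo "dhcpd: %s"\n' 'handling $1 for $2' > "$demo/20-dhcpd"
run_hooks "$demo" start server1
rm -rf "$demo"
```

Adding another plugin then only means dropping one more script into the directory; the caller does not need to change.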

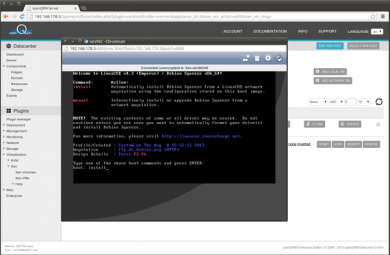

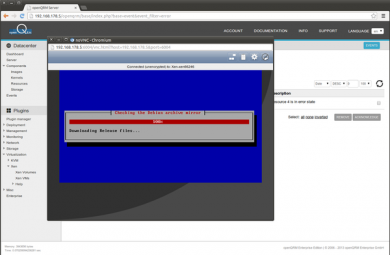

Go to Plugins -> Virtualization -> Xen -> Xen VMs and select the Xen Host. In the Xen VM overview click on the 'console' button of the VM. This opens a VNC console within your web browser.

Please notice! You need to deactivate the browser's Pop-up Blocker for the openQRM website!

To start the automatic installation please type 'install' in the VNC console and press ENTER.

The Xen VM is now automatically installing a Debian Linux distribution. Good time for you to grab a coffee!

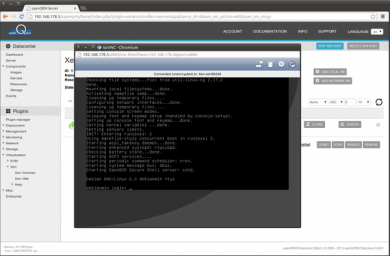

Please notice! After the automatic installation via the attached LinuxCOE ISO image the VM reboots to the install screen again.

We now have to re-configure the VM's boot sequence to 'local-boot'. To do this please follow the steps below:

- Stop the VM by stopping its server object - Datacentre -> Server -> Select + Stop

- In Plugins -> Virtualization -> Xen -> Xen VMs select the Xen Host and update the VM to boot 'local'

- Now start the VM again by starting its server object - Datacentre -> Server -> Select + Start

Here's a screenshot of the completed Debian installation after setting the boot-sequence of the VM to 'local'

Install the 'openqrm-local-vm-client'

Now it is recommended to install the 'openqrm-local-vm-client' on the freshly installed system. For locally installed Virtual Machines (e.g. kvm (local VM), xen (local VM), lxc (local VM), openvz (local VM)) which have access to the openQRM network, the 'openqrm-local-vm-client' activates the plugin-client-boot-services to allow further management functionality (e.g. application deployment with Puppet, system statistics with Collectd etc.). Monitoring and openQRM actions are still running on behalf of the VM Host.

To install the 'openqrm-local-vm-client' on the VM please follow the steps below:

- Copy the 'openqrm-local-vm-client' utility to the running VM

cd /usr/share/openqrm/plugins/local-server/web/local-vm/ && scp openqrm-local-vm-client [ip-address-of-the-VM]:/tmp/

- Then login to the VM

ssh [ip-address-of-the-VM]

- This prompts for the password which was configured in the LinuxCOE automatic-installation template. Enter the password and execute the openqrm-local-vm-client utility

/tmp/openqrm-local-vm-client

This will automatically set up the 'openqrm-local-vm-client' in the system init and start it.

Create additional Xen VMs by cloning/snapshotting the Volume

Go to Plugins -> Virtualization -> Xen -> Xen Volumes and select the 'xen-lvm' storage object. Then choose the 'xenvg' logical volume group to get a list of all available LVM volumes.

In this view you can use the 'clone' or 'snap' action to clone or snapshot existing, installed Xen LVM Volumes. The 'clone' action creates a new logical volume and copies the content of the origin over. Snapshotting uses the "copy-on-write" mechanism of LVM, which is much more efficient. A deployed snapshot of a Xen LVM Volume stores only the changes compared to its origin. That means that creating snapshots, e.g. one per user, allows you to store 'just' the data which differs per user.
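
The difference between the two actions can be seen in the standard LVM commands that a manual clone or snapshot would use. The following is a minimal sketch that only prints the commands; the volume names vm1, vm2 and vm1snap, the sizes and the function names are made up for this example, and that openQRM runs exactly these commands is an assumption.

```shell
#!/bin/sh
# Print the commands for a full clone: a new volume plus a block-level copy.
# Arguments: volume group, origin volume, new volume, size of the new volume.
clone_cmds() {
    echo "lvcreate -L $4 -n $3 $1"
    echo "dd if=/dev/$1/$2 of=/dev/$1/$3 bs=1M"
}

# Print the command for a copy-on-write snapshot.
# Arguments: volume group, origin volume, snapshot name, space reserved for changes.
snap_cmd() {
    echo "lvcreate -s -L $4 -n $3 /dev/$1/$2"
}

clone_cmds xenvg vm1 vm2 5G
snap_cmd xenvg vm1 vm1snap 1G
```

The clone needs as much space as the origin and takes time to copy, while the snapshot is created immediately and only needs room for the blocks that change later.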

Congratulations!

You've successfully installed Xen Virtualisation on openQRM!